Robust Perception through Equivariance

¹Columbia University, ²Rutgers University

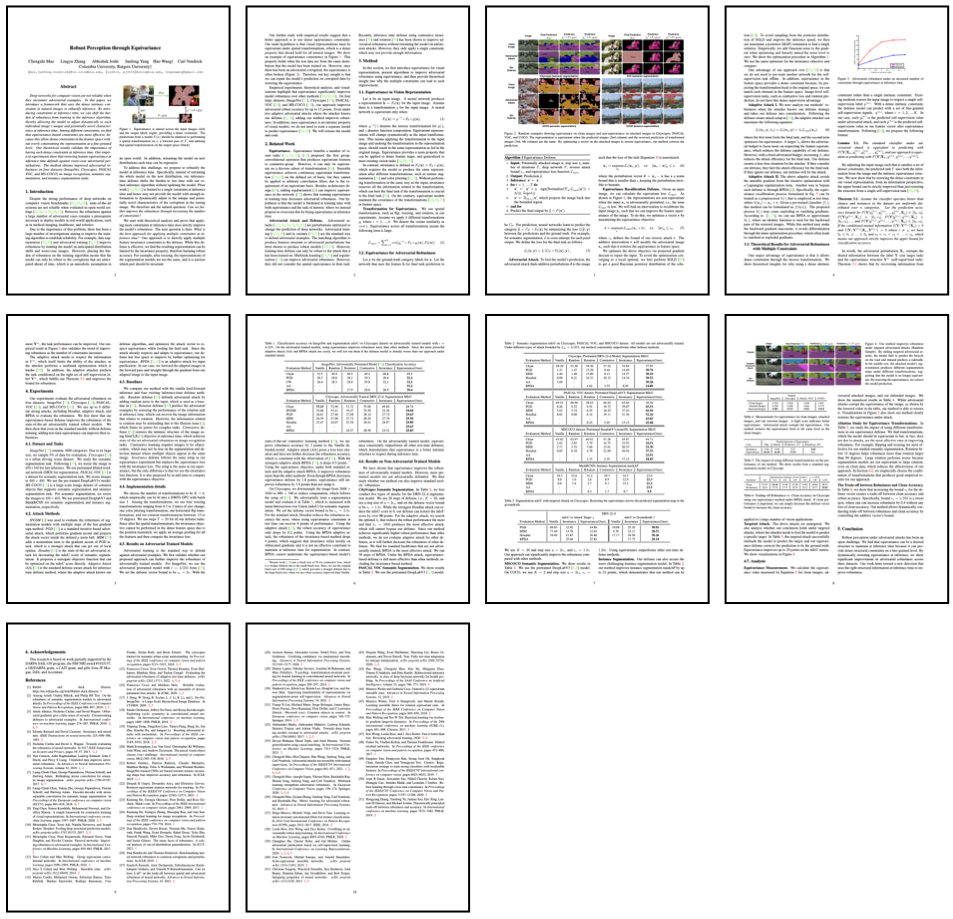

We introduce a framework that uses the dense intrinsic constraints in natural images to robustify inference. Among different constraints, we find that equivariance-based constraints are most effective. For natural images, equivariance is shared across the input images (left) and the output labels (right), providing a dense constraint. The prediction F(x) should be identical to the result of applying a spatial transformation to x, running a forward pass of F, and undoing that spatial transformation in the output space (black).
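The constraint in this caption can be written as F(x) = T⁻¹(F(T(x))) for a spatial transformation T. The following is a minimal sketch of that check on a toy example, not the paper's implementation: the "image" is a small grid of numbers, T is a horizontal flip, and both models (`pointwise_model`, `position_biased_model`) as well as the helper `is_equivariant` are hypothetical names introduced only for illustration.

```python
# Toy illustration only: all names here are hypothetical, not from the paper.
# An "image" is a list of rows; a "model" assigns a 0/1 label to each pixel.

def hflip(grid):
    """Horizontal flip of a grid. Flipping twice returns the original."""
    return [list(reversed(row)) for row in grid]

def pointwise_model(image):
    """Per-pixel threshold. It ignores pixel position, so it commutes with flips."""
    return [[1 if v > 0.5 else 0 for v in row] for row in image]

def position_biased_model(image):
    """Adds a column-dependent bias before thresholding, breaking flip equivariance."""
    return [[1 if v + 0.3 * j > 0.5 else 0 for j, v in enumerate(row)]
            for row in image]

def is_equivariant(model, image, transform, inverse):
    """Check F(x) == T^{-1}(F(T(x))) for one image and one transformation."""
    return model(image) == inverse(model(transform(image)))

image = [[0.1, 0.4, 0.9],
         [0.7, 0.1, 0.3]]

print(is_equivariant(pointwise_model, image, hflip, hflip))        # True
print(is_equivariant(position_biased_model, image, hflip, hflip))  # False
```

Because every output location is compared, a single transformation already yields one constraint per pixel, which is what makes the constraint dense.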

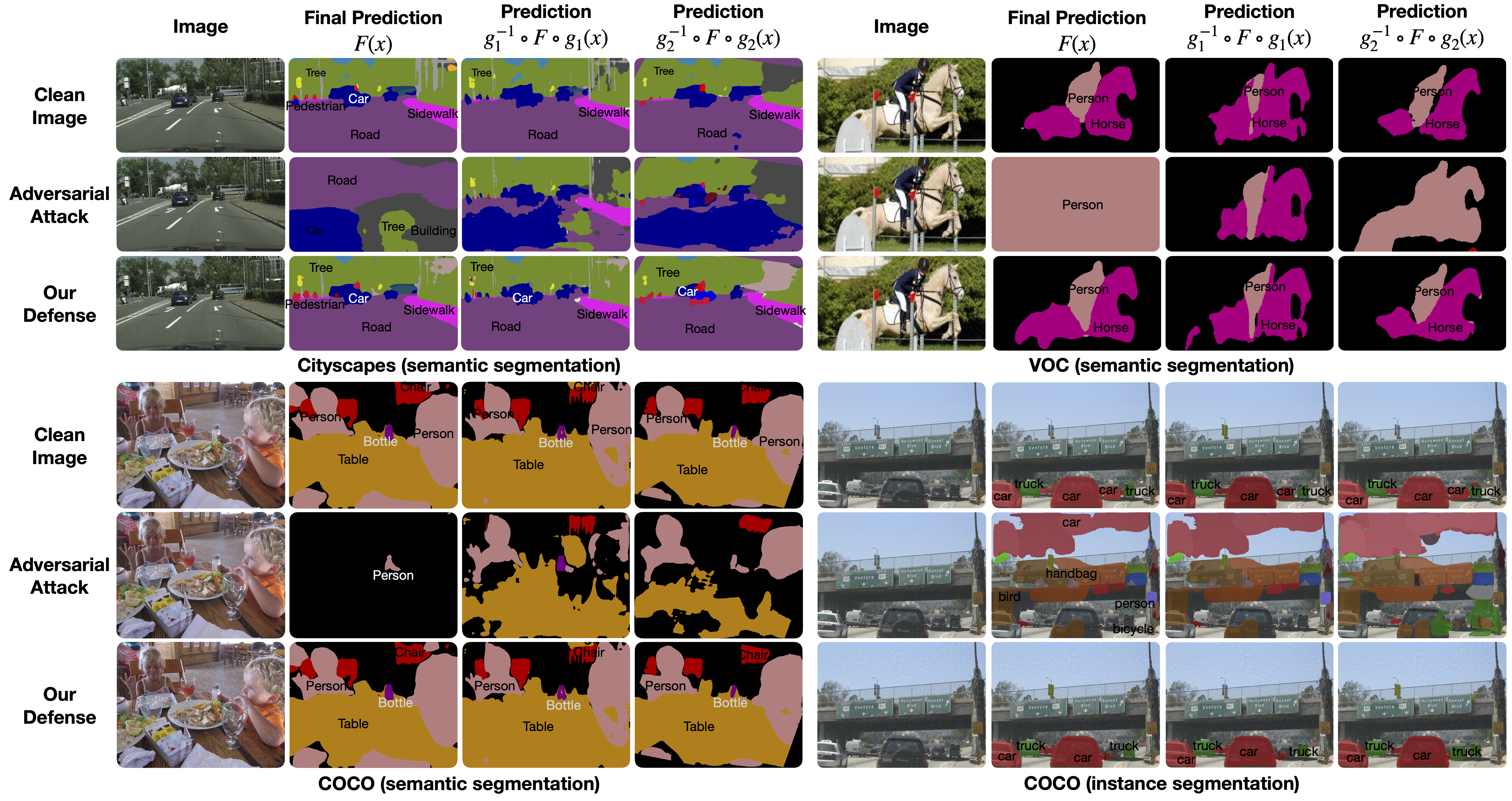

Representations are equivariant when the predicted images (2nd column) and the reversed predictions of transformed images (3rd and 4th columns) are the same. Clean images satisfy this naturally, while attacked images do not. By optimizing a vector on the attacked images to restore equivariance, our method corrects the prediction.
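The idea of optimizing an additive vector to restore equivariance can be sketched on a one-dimensional toy problem. Everything below is an assumption made for illustration and not the paper's algorithm or model: `toy_features` is a hypothetical feature extractor that is equivariant to reversal only for inputs in [-1, 1], the transformation T is sequence reversal, the gradient is estimated with finite differences, and the budget `eps`, step size `lr`, and step count are arbitrary choices.

```python
# Toy sketch under stated assumptions; names and constants are hypothetical.

def reverse(seq):
    """The transformation T (its own inverse): reverse a 1-D signal."""
    return list(reversed(seq))

def toy_features(x, gains=(0.0, 1.0, 2.0, 3.0)):
    """Identity on values in [-1, 1]; a position-dependent gain is applied to any
    excess beyond that range, so out-of-range inputs break reversal equivariance."""
    return [v + g * max(0.0, abs(v) - 1.0) for v, g in zip(x, gains)]

def equivariance_loss(x, r):
    """Squared gap between F(x + r) and T^{-1}(F(T(x + r)))."""
    z = [a + b for a, b in zip(x, r)]
    direct = toy_features(z)
    round_trip = reverse(toy_features(reverse(z)))
    return sum((d - t) ** 2 for d, t in zip(direct, round_trip))

def restore_equivariance(x, eps=1.0, lr=0.02, steps=200, h=1e-5):
    """Projected gradient descent on r with central finite-difference gradients."""
    r = [0.0] * len(x)
    for _ in range(steps):
        grad = []
        for i in range(len(r)):
            up = r[:i] + [r[i] + h] + r[i + 1:]
            down = r[:i] + [r[i] - h] + r[i + 1:]
            grad.append((equivariance_loss(x, up) - equivariance_loss(x, down)) / (2 * h))
        # Gradient step, then clip each coordinate to the budget [-eps, eps].
        r = [max(-eps, min(eps, ri - lr * gi)) for ri, gi in zip(r, grad)]
    return r

attacked = [1.5, 0.2, -0.3, -1.4]   # two coordinates lie outside [-1, 1]
r = restore_equivariance(attacked)
loss_before = equivariance_loss(attacked, [0.0] * len(attacked))
loss_after = equivariance_loss(attacked, r)
print(loss_before, loss_after)
```

In this toy setting the loss drops toward zero because the vector pulls the out-of-range coordinates back into the region where the feature extractor is equivariant; in-range coordinates receive zero gradient and are left untouched.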

Paper

Abstract

Deep networks for computer vision are not reliable when they encounter adversarial examples. In this paper, we introduce a framework that uses the dense intrinsic constraints in natural images to robustify inference. By introducing constraints at inference time, we can shift the burden of robustness from training to the inference algorithm, thereby allowing the model to adjust dynamically to each individual image's unique and potentially novel characteristics at inference time. Among different constraints, we find that equivariance-based constraints are most effective, because they allow dense constraints in the feature space without overly constraining the representation at a fine-grained level. Our theoretical results validate the importance of having such dense constraints at inference time. Our empirical experiments show that restoring feature equivariance at inference time defends against worst-case adversarial perturbations. The method obtains improved adversarial robustness on four datasets (ImageNet, Cityscapes, PASCAL VOC, and MS-COCO) on image recognition, semantic segmentation, and instance segmentation tasks.

BibTeX Citation

@article{mao2022robust,
  title={Robust Perception through Equivariance},
  author={Mao, Chengzhi and Zhang, Lingyu and Joshi, Abhishek and Yang, Junfeng and Wang, Hao and Vondrick, Carl},
  journal={arXiv preprint arXiv:2212.06079},
  year={2022}
}

Acknowledgements

This research is based on work partially supported by the DARPA SAIL-ON program, the NSF NRI award #1925157, a GE/DARPA grant, a CAIT grant, and gifts from JP Morgan, DiDi, and Accenture. The webpage template was inspired by this project page.